Anatomy of Windows Programs

Running Programs - A brief history

Have you ever wondered what makes programs tick, and what actually happens when you launch an application? Have you also wondered why more and more disk space seems to be taken up by Windows updates? This series of pages introduces you to the intricacies involved.

When programs were first developed they ran on systems with a ridiculously small amount of memory. 32 KB was a large system memory! In this environment every byte counted and there was absolutely no space for anything that was not essential. Compare this with Windows 10® systems now, with a recommended memory size of 8 GB: that is roughly 250,000 times bigger. Since Vista, Windows has used a lot of this memory on a 'just in case' basis - when you want to do something it is more than likely that the code or data is already in memory. Back in the 1970s programs tried to save on memory usage by creating memory 'overlays': a program could overwrite parts of itself with new sections of code just to economize on the total memory space needed at any one time.

The earliest days also saw programs that took over the whole computer - once loaded, a program was in full control of I/O and memory. Then along came Operating Systems (O.S.) that started with basic capabilities such as managing multiple programs without needing to reboot the whole machine every time a program was run. The O.S. loaded a program, ran it and, when it exited, tidied up and allowed the next program to run. This was a novelty: precious memory was now taken up not just by the program you wanted to run but by an Operating System as well.

Over the years Operating Systems have done more and more on behalf of programs - managing shared devices like printers and managing access to files stored on a disc.

Libraries

Most applications need to do more or less the same kind of thing. From the earliest days, when computers principally solved mathematical problems, it made sense to keep libraries of 'useful' functions for programs to share. In those days these functions were often mathematical, such as trigonometric functions or routines for solving partial differential equations. The earliest form of library was actually lengths of paper tape that were physically spliced into a program where they were needed.

When an application used a library you needed to specify the library and ensure it was linked into the program code. The linker would work out which particular functions you needed from the library and include only these in the executable image for the program. The 'static library' file was born.
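The selection step the linker performs can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the library contents and the function names (`MATH_LIBRARY`, `reduce_angle`, `solve_pde`, `link`) are made up for the example, and a real linker works on compiled object files rather than on a dictionary of names.

```python
# Hypothetical static library: function name -> names of other library
# functions it calls. The contents are invented for illustration.
MATH_LIBRARY = {
    "sin": ["reduce_angle"],
    "cos": ["reduce_angle"],
    "reduce_angle": [],
    "sqrt": [],
    "solve_pde": ["sqrt"],
}


def link(program_calls, library):
    """Return the set of library functions to copy into the executable."""
    needed = set()
    pending = list(program_calls)
    while pending:
        name = pending.pop()
        if name in needed:
            continue
        if name not in library:
            raise KeyError(f"unresolved symbol: {name}")
        needed.add(name)
        # A library function may itself depend on other library functions.
        pending.extend(library[name])
    return needed


# A program that only calls sin() pulls in sin and its helper, nothing else.
linked = link(["sin"], MATH_LIBRARY)
print(sorted(linked))
```

Everything the program does not reach, such as `solve_pde` here, is left out of the executable image, which is how a static library kept programs small.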

Quite apart from re-using shared code for computations it was also useful to use libraries to ask for services from the Operating System - opening and reading a file, for example. This is a big deal as it insulates a program from needing to know the details of the way data is stored on discs. The Operating System turned into a set of function libraries for programs to make use of.

Microsoft® Windows®

When Microsoft® developed Windows® for the earliest PCs a memory size of 256 KB was generous, and so from the outset it was important to keep memory requirements as low as possible. The last thing they needed was lots of applications containing copies of identical 'library' code. It was with this in mind that the concept of Dynamic Link Libraries (or DLLs) was invented. The system consisted of a number of these DLLs. Splitting it up into separate libraries means only a few DLLs need to be loaded into memory at one time. An application no longer included copies of the shared code; instead it 'dynamically' linked to the system libraries at run time rather than when the program was built. It was therefore possible to create very small applications, as the code in the system DLLs did much of the work. To implement this an application program has to declare the DLLs that it needs to load, and this list is embedded within the program image. When the program is run the system knows to load these libraries and link them in. As many applications use the same functions, system DLLs can be kept in memory and shared between different programs, greatly economizing on the memory required. Each program needs its own data space, but the code is 'read only' and so can be freely shared.
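The sharing described above can be modelled with a toy loader in Python. This is a sketch, not the real Windows loader: the `Loader` class, the program dictionaries and their `imports` lists are assumptions made for illustration, standing in for the list of required DLLs embedded in a program image.

```python
class Loader:
    """Toy model of a run-time loader (not the real Windows loader)."""

    def __init__(self):
        self.loaded = {}      # DLL name -> shared, read-only "code"
        self.load_count = 0   # how many times a DLL was actually read in

    def load_library(self, name):
        # Load the DLL only if no running program has brought it in already.
        if name not in self.loaded:
            self.load_count += 1
            self.loaded[name] = f"<code of {name}>"
        return self.loaded[name]

    def start(self, program):
        # Each program shares the code but gets its own private data space.
        modules = {
            dll: {"code": self.load_library(dll), "data": {}}
            for dll in program["imports"]
        }
        return {"name": program["name"], "modules": modules}


loader = Loader()
editor = loader.start({"name": "editor", "imports": ["kernel32.dll", "user32.dll"]})
calc = loader.start({"name": "calc", "imports": ["kernel32.dll", "user32.dll", "gdi32.dll"]})

# Five imports in total, but only three DLLs were loaded.
print(loader.load_count)
```

The two programs hold the same code object for `user32.dll` but separate `data` dictionaries, which mirrors the split between shared read-only code and per-program data.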

Apart from saving valuable memory space this approach has the advantage that an application is 'insulated' from the system. It can run on different makes of PC with different hardware. The system will have different DLLs and drivers to cope with the different devices for an individual PC but the interface presented to the application is just the same. Opening a file on a floppy disc, a CD or a hard disc is done in just the same way as far as the program is concerned.

We are so used to the concept that applications on one computer 'should' run on another that it is easy to forget that it was not always this way. In the bad old days of Operating Systems you could only be sure that an application would run on an identical system (both hardware and software). Quite often an Operating System upgrade or the installation of a new type of disk drive meant you needed to amend and rebuild the applications to make use of it. It was the software development cost of this continual maintenance that helped kill off these other Operating Systems. Windows is remarkably good at running 'old' software. I have an MS-DOS® application from 1991 that I can still run on XP® and it does just the same thing, and a 16-bit Windows application from 1992 that I still use quite frequently.

At the technical level there are actually two 'abstraction levels' at work here. Firstly there is the hardware level: 'HAL' (the Hardware Abstraction Layer), together with the device drivers, is the key to keeping Windows generic to the hardware devices you connect to it. All devices of a given kind have to provide the same driver interface to Windows. This is what lets you replace printers or graphics cards with ones from different manufacturers without applications needing to know a change has been made - they can use a generic 'printer' and 'display'. Secondly there is a 'user interface' abstraction layer. Windows provides the mechanism for displaying edit boxes and dialog boxes, and the application just requests 'put a check box here' without knowing the details. Windows can then provide different implementations of a 'check box' without the application knowing or caring about which one is used. The user interface may look rather different on different computers or different versions of Windows but the application itself does not need to change.

DLL Hell

About ten years ago there was a major issue with how Windows was working. Microsoft was issuing quite a few fixes to Operating Systems and also to their development tools (principally Microsoft Visual C++). Unfortunately these led to a number of released products failing to work after the changes had been applied. This became known as DLL Hell, as the root cause was the sharing of DLLs.

If you just add new functions or extend what a function does you should be able to issue a new version that will support all the software that already uses the previous version of the DLL. But the number and types of the parameters of an existing function cannot be so easily changed - the application would need to be modified and rebuilt to supply the changed parameters.
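The rule in the previous paragraph can be written as a small check. This is a minimal sketch, assuming a DLL's interface can be summarized as a mapping from function name to a tuple of parameter types; the function names and versions below are hypothetical.

```python
def is_backward_compatible(old_exports, new_exports):
    """Both arguments map a function name to a tuple of parameter types."""
    for name, params in old_exports.items():
        if name not in new_exports:
            return False  # an existing function was removed
        if new_exports[name] != params:
            return False  # parameter count or types changed
    return True  # extra functions in new_exports are harmless


# Hypothetical exports of three versions of the same DLL.
v1 = {"OpenThing": ("str",), "ReadThing": ("int", "int")}
v2 = {"OpenThing": ("str",), "ReadThing": ("int", "int"), "CloseThing": ("int",)}
v3 = {"OpenThing": ("str", "int"), "ReadThing": ("int", "int")}

print(is_backward_compatible(v1, v2))  # v2 only adds a function
print(is_backward_compatible(v1, v3))  # v3 changes OpenThing's parameters
```

Note that the check only covers the shape of the interface. A function whose parameters are unchanged but whose behaviour differs would pass it and could still break an application.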

One common reason these problems occurred was that developers would try to find an ingenious workaround for a known bug in Windows. To get a program working as required, the developers had little choice but to find a way around the problem, taking account of the way it went wrong. But when the problem eventually gets fixed the software may now fail because it relied on the presence of the original bug. At the time this seemed a reasonable strategy for a software developer faced with a problem they needed to work around quickly. The end-user ended up with an application that worked, but when they upgraded the Operating System the application would fail.

Why did this happen in Windows? Mainly because of its success: lots of developers were writing software for it. In other Operating Systems it tended to be only the computer manufacturer who wrote the software. The other reason was that Windows was designed on the basis of trust rather than distrust. It may seem naive now, but in those days a developer was trusted to write well-behaved software. A bug in an application would more than likely crash the whole of Windows and need a reboot. An application, believe it or not, had to willingly suspend what it was doing at regular intervals (by calling a function 'Yield') to allow other programs to run. If it did not call this function, other programs would not get a look-in until the current application had terminated. When you wrote a Windows application you were trusted to be 'fair' to every other program on the system. This is indeed an architecture that needs to work on trust.
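This kind of cooperative scheduling can be sketched with Python generators, where the `yield` statement plays the role of the 'Yield' call. The scheduler and the task names here are illustrative assumptions, not a model of the actual Windows scheduler.

```python
from collections import deque


def polite_task(name, steps, log):
    """Does one step of work, then gives other programs a turn."""
    for i in range(steps):
        log.append(f"{name}{i}")
        yield  # hand control back to the scheduler


def greedy_task(name, steps, log):
    """Does all of its work before giving anyone else a turn."""
    for i in range(steps):
        log.append(f"{name}{i}")
    yield


def run(tasks):
    """Round-robin scheduler that relies entirely on tasks yielding."""
    queue = deque(tasks)
    while queue:
        current = queue.popleft()
        try:
            next(current)  # runs until the task chooses to yield
        except StopIteration:
            continue       # task has finished; drop it
        queue.append(current)


fair_log = []
run([polite_task("A", 2, fair_log), polite_task("B", 2, fair_log)])
print(fair_log)    # work from A and B is interleaved

unfair_log = []
run([greedy_task("G", 3, unfair_log), polite_task("B", 2, unfair_log)])
print(unfair_log)  # B gets nothing done until G has finished its work
```

The scheduler has no way to interrupt `greedy_task`; it gets control back only when a task yields, which is why one badly behaved program could starve everything else.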

MFC Hell

In those days the main Windows application development language was C++ and Microsoft pushed their own class library to simplify Windows programming. This was called MFC (for Microsoft Foundation Classes) and implemented a thin skin of wrapper functions around the Windows API. This was also the time that everyone was migrating from 16-bit to 32-bit Windows, and using a library helped insulate developers from the specifics of one system or the other. This made it fairly easy to write source code that could be used on either 16-bit (Windows 3.1) or 32-bit (Windows 95 or NT) systems. Microsoft went through a phase of issuing new versions of the MFC DLLs at frequent intervals with new features and fixes. Due to these changes there were lots of broken applications and annoyed customers and developers.

If you take the Microsoft perspective on this, the chief problem was that developers had a nasty habit of overwriting the shared MFC DLL in the Windows system folder with the version of the DLL they needed for their application - irrespective of whether their version was older than the one currently installed. There was no standard installation tool at the time, so many developers just used a brutal 'copy' without checking which versions were involved. Another installation issue was that the MFC DLL was often in use by other applications - including Windows itself - so if you wanted to replace it with an upgrade the system needed to be rebooted. At this time it was quite possible to need to reboot the PC five times just to install one piece of software, as the installer forced a reboot each time it detected a system DLL that it needed to update.
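The check that the brutal 'copy' skipped is short. Here is a minimal sketch, assuming versions are dotted number strings and using a dictionary as a stand-in for the system folder; the file name `shared.dll` and the helper names are hypothetical.

```python
def parse_version(text):
    """Turn '4.10.0' into (4, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def install_shared_dll(system_folder, name, new_version):
    """Install only if the DLL is missing or older than the one offered.

    system_folder is a dict of file name -> version string, a stand-in
    for the real folder. Returns True if the file was (over)written.
    """
    installed = system_folder.get(name)
    if installed is not None and parse_version(installed) >= parse_version(new_version):
        return False  # never replace a DLL with an older or identical one
    system_folder[name] = new_version
    return True


folder = {"shared.dll": "4.2.0"}
print(install_shared_dll(folder, "shared.dll", "4.1.0"))   # older: refused
print(install_shared_dll(folder, "shared.dll", "4.10.0"))  # newer: installed
print(folder)
```

Comparing the versions as tuples of integers matters: compared as plain strings, "4.10.0" would sort before "4.2.0" and the newer file would be wrongly rejected.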

There were two clear ways out of this:

1. Give the DLL a different name each time a new one is issued, so an application can make sure the named version is installed. But this approach will litter the system with lots of similar software, and all applications need to include their version of the 'so-called' shared software in their installation package. If you have wondered why there is a ubiquitous DLL mfc42.dll, this is because MFC version 4.2 was the stage at which they gave up on this approach.

2. Always put a copy of the version of MFC you want in the application's installation folder. The application then uses this one rather than the one in the system folder. Same problem - lots of copies of potentially identical DLLs and bloated installation download sizes.
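The second option depends on the order in which folders are searched for a DLL. The lookup can be sketched as below, with dictionaries standing in for the two folders. This is a simplification under stated assumptions: the real Windows search order has more steps than these two, and the names `find_dll` and `shared.dll` are hypothetical.

```python
def find_dll(name, app_folder, system_folder):
    """Look in the application's own folder first, then the system folder.

    Each folder is a dict of file name -> version string.
    Returns (where it was found, version).
    """
    for location, folder in (("application", app_folder), ("system", system_folder)):
        if name in folder:
            return location, folder[name]
    raise FileNotFoundError(name)


system_folder = {"shared.dll": "4.2.0", "other.dll": "1.0.0"}
app_folder = {"shared.dll": "4.0.0"}  # private copy shipped with the app

print(find_dll("shared.dll", app_folder, system_folder))  # private copy wins
print(find_dll("other.dll", app_folder, system_folder))   # falls back to system
```

The private copy shields the application from changes to the system folder, at the cost of the duplicated files and larger downloads mentioned above.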

So the 'DLL' that started out as a brilliant time and space saving idea led to a lot of people being fed up with Windows - crashes, reboots on installs, updates...

The Road from Hell

How could all this be sorted out? As ever, Microsoft wanted to allow both old and new programs to run on an updated version of Windows, so they could not adopt a totally new approach that would stop all existing applications from working. Windows needed mechanisms to support different versions of components and to stop rogue software from changing system components.

Protecting the System

With the release of Windows XP Microsoft incorporated a scheme to look after the contents of the system folder. If some application overwrites a system component, Windows can offer to roll back to the previous version and also warn the user. With Vista this has gone a step further - you need to run as an administrator, and the user has at some stage to specifically agree to changes to key system components and the system registry.

Clearly a change of approach had been needed. Rather than an application feeling the need to update the system on behalf of a user, an upgrade of anything within Windows should be done only by a Microsoft tool: Windows Update. If the user hasn't got the system version that the application needed then the application installer must not try to update the system for them.

The Malicious Software Removal Tool that is delivered through Windows Update is also part of this process. It double-checks that the system DLLs have not been tampered with by checking the signature of each file. If the file has been modified its signature will change and will no longer match its original value.
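The core idea, that any change to a file changes a value computed from its contents, can be shown with a cryptographic hash. This is a sketch of that one idea only: it uses SHA-256 from Python's standard `hashlib` as an assumption, and it leaves out the signing step that real code signing adds on top of the hash.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Hash of the file contents; any modification gives a different value."""
    return hashlib.sha256(data).hexdigest()


def is_untampered(data: bytes, expected: str) -> bool:
    """Compare the current fingerprint with the one recorded at release."""
    return fingerprint(data) == expected


# Stand-in bytes for a system DLL as shipped, and its recorded fingerprint.
original = b"pretend these bytes are the contents of a system DLL"
recorded = fingerprint(original)

# The same file with a single byte changed.
modified = original.replace(b"system", b"sYstem")

print(is_untampered(original, recorded))
print(is_untampered(modified, recorded))
```

In practice the recorded value must itself be protected, otherwise whoever modified the file could simply replace the recorded fingerprint as well; that is the job of the digital signature.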

Delayed load

Apart from DLL Hell, the main issue that has hit Windows programs is that they threw themselves into the DLL world in too big a way. A program could reference dozens of DLLs that all needed to be loaded before it could be run. This can give an embarrassingly long delay before anything happens. Users are very sensitive to load time, as they have decided to run an application and want to start using it a.s.a.p. This is a rather sad state of affairs because the program doesn't need all those DLLs immediately. In fact, depending on what the user does, it may never actually need to load some of them. To get around this Microsoft introduced delayed load DLLs. Not often used, this approach allows a program to put off the loading of a DLL until it actually needs it, so reducing the agonizing delay when a program starts up. If you've wondered why programs display a version 'splash' screen, this is another way to disguise the time taken while the program gets ready for user input.
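The idea behind delayed loading is ordinary lazy initialization, and can be sketched as follows. The `DelayLoaded` class, the `fake_loader` function and the `spellcheck.dll` name are all hypothetical; on Windows the equivalent work is done by the linker and a helper routine rather than by application code like this.

```python
class DelayLoaded:
    """Stand-in for a DLL that is loaded only on first use."""

    def __init__(self, name, loader):
        self._name = name
        self._loader = loader
        self._module = None  # nothing is loaded at program start-up

    def call(self, function, *args):
        if self._module is None:
            # First use: pay the loading cost now, and only once.
            self._module = self._loader(self._name)
        return self._module[function](*args)


loads = []  # records every (simulated) expensive load


def fake_loader(name):
    """Hypothetical loader: returns the DLL's functions as a dict."""
    loads.append(name)
    return {"length": len}


spell = DelayLoaded("spellcheck.dll", fake_loader)
print(loads)                           # start-up: nothing loaded yet

print(spell.call("length", "hello"))   # first call triggers the load
print(spell.call("length", "hi"))      # later calls reuse the loaded DLL
print(loads)
```

If the user never uses the feature, `call` is never invoked and the DLL is never loaded at all, which is exactly the saving described above.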

Viewing Programs with InspectExe

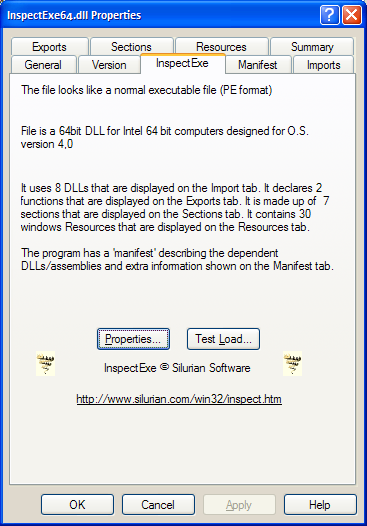

InspectExe lets you find out all about a Windows program. Here is just one of the information pages it can display:

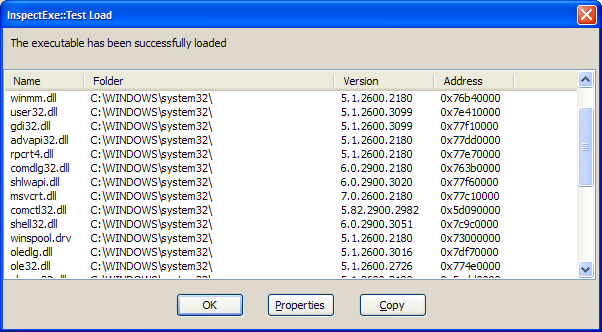

It also lets you 'test' loading of a program so you can see which DLLs are loaded and in what order: